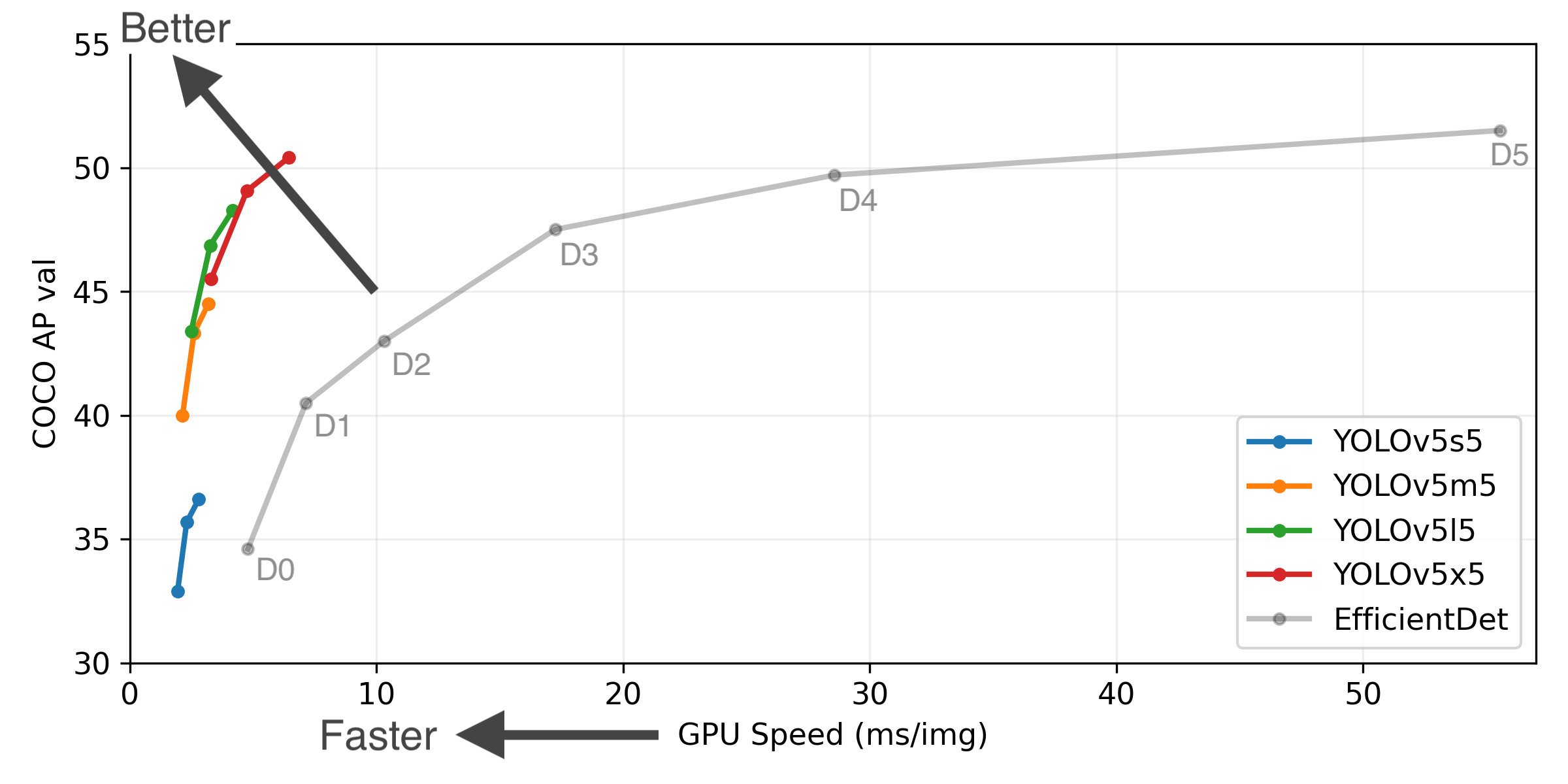

YOLOv5 🚀 is a family of object detection architectures and models pretrained on the COCO dataset. It represents Ultralytics' open-source research into future vision AI methods and incorporates lessons learned and best practices developed over thousands of hours of research and development.